AI transparency labels for everyday use

I've been thinking about AI ethics and transparency, and how not knowing that something was generated by AI can have drawbacks in everyday work situations. So I've created some "AI transparency labels" you can use to be upfront about when you have (or haven't) used AI.

Recently a colleague shared something with me for a project, and something felt off. They’re super smart, but this piece of work had some glaring flaws that you’d only spot if you had the right context.

It turned out the work had been generated by AI. There’s nothing inherently wrong with that, and the intentions were good, but not stating it explicitly created more noise than necessary.

This took me back to a podcast* I’d listened to on AI ethics and transparency. How might this situation have been different if there had been more transparency about the use of AI?

I’m also seeing more and more LinkedIn posts that feel like a prompt > copy & paste job. They often make me ask: “Is this you? Are you actually an expert? Or is this just GPT?”

I’ve now started running content through AI detection tools like Pangram to try to understand whether it’s 100% AI-generated, co-created or human-made.

So I’m going to experiment with AI transparency labels in the content I create and the work I do, to see how others react.

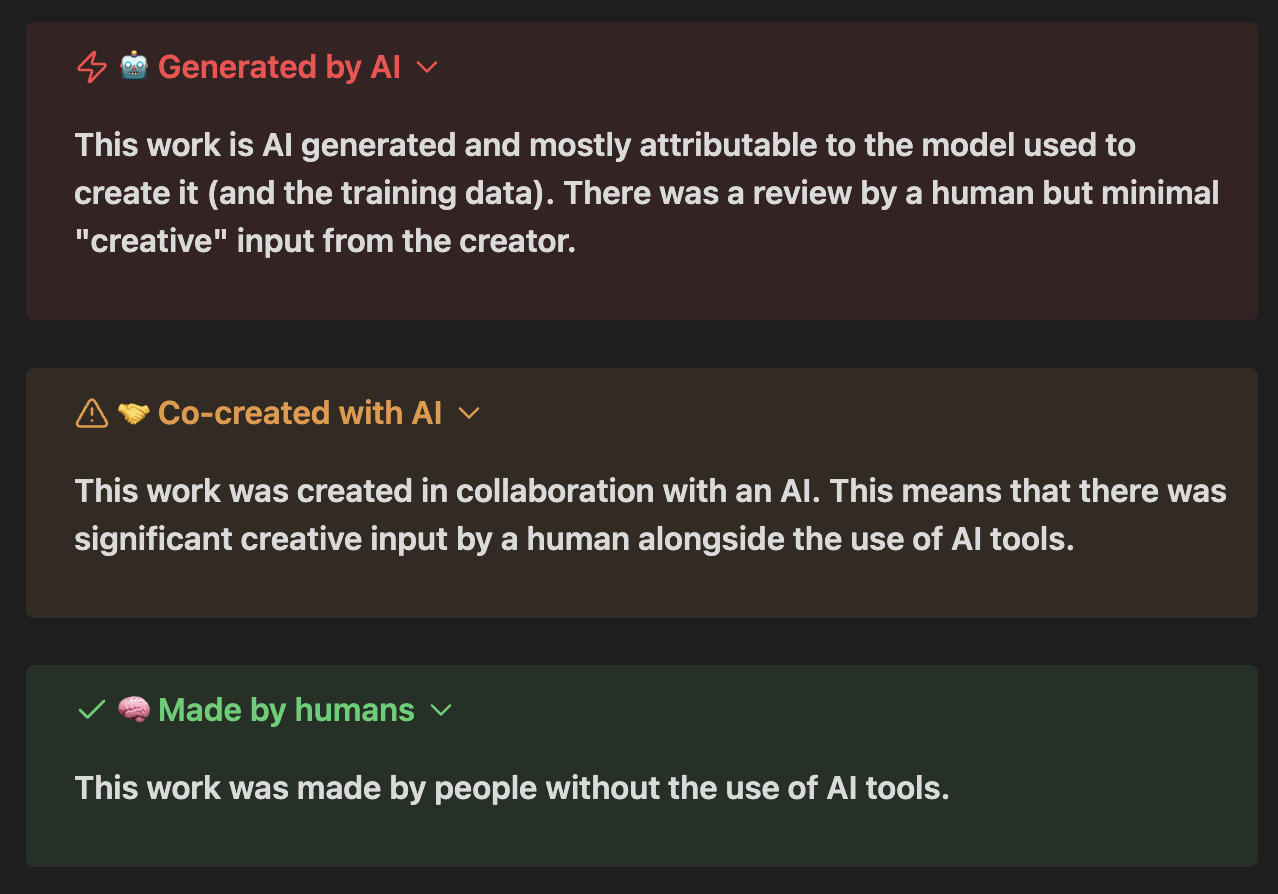

Here’s my first stab at them.

This is obviously not a perfect solution, but it’s a start. Some open questions:

- It relies on honesty.
- Where’s the line between co-created and fully AI-generated?
- How do you verify this?

I agree with Steven Bartlett’s recent post that LLMs are starting to make people come across as inauthentic, and that you stand out if you still sound like a human.

_* I think it was the Slo Mo podcast, but I’m struggling to find the original author._

Co-created with AI

This blog post was created in collaboration with AI. This means there was significant creative input from a human alongside the use of AI tools.

- The text of this post was written by me without the aid of AI.

- I used ChatGPT to help me think through the approach to labels.

- I used Perplexity and Gemini to try to find the podcast and the guest who talked about nutrition-style labels for AI (which was a big inspiration), but I’ve struggled to find them.